@adlrocha - A gentle introduction to VDFs

Verifiable Delay Functions

It has been a while since I explored a new concept in the field of cryptography, and even longer since I last wrote about cryptography in this newsletter (my last publications on the topic were my tutorial on Zokrates and the ZKP Game). Today I will share my notes from the brief research I did on a concept I’ve been hearing about for a while now and which is being widely explored by projects in the crypto space: Verifiable Delay Functions.

Disclaimer: This publication may end up being pretty unstructured as it is the result of the transcription of the notes I took in my “non-digital” notebook while researching VDFs. I’ll do my best.

The issues with randomness

“Verifiable Delay Functions are a way to slow things down verifiably”. This is the most illustrative definition of VDFs I’ve seen (it is from this awesome talk, by the way). But why would we want to slow things down? What is the purpose of these brand new primitives? The answer is randomness.

Finding randomness on the blockchain is hard. Smart contract executions must be deterministic, but for certain applications a bit of randomness may come in handy. To achieve this, developers try to derive randomness from information in the network such as future block hashes, block difficulty, or timestamps. Unfortunately, all these schemes have a key limitation: everyone can observe how the choices they make affect the randomness generated on-chain, so whoever controls those choices (a miner picking a timestamp, for instance) can bias the result. In this article you have a few examples of vulnerable implementations of randomness using block information as sources of entropy.
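To make the limitation concrete, here is a minimal Python sketch of a block producer "grinding" a timestamp. The scheme itself (`coin_flip`, the 15-second window, the inputs) is a made-up toy for illustration and is not taken from any real contract or chain.

```python
import hashlib

def coin_flip(prev_block_hash: bytes, timestamp: int) -> int:
    """Toy on-chain 'random' bit derived from block data (hypothetical scheme)."""
    digest = hashlib.sha256(prev_block_hash + timestamp.to_bytes(8, "big")).digest()
    return digest[-1] & 1

def grind_timestamp(prev_block_hash: bytes, earliest: int, window: int, wanted: int):
    """Try every timestamp the producer is allowed to use and keep one that wins."""
    for ts in range(earliest, earliest + window):
        if coin_flip(prev_block_hash, ts) == wanted:
            return ts
    return None  # no favourable timestamp inside the window

prev_hash = hashlib.sha256(b"previous block").digest()
ts = grind_timestamp(prev_hash, earliest=1_600_000_000, window=15, wanted=1)
print("timestamp chosen by the block producer:", ts)
```

Because the outcome is computed instantly from values the producer controls, trying a handful of candidates is enough to pick the result. A forced delay between choosing the inputs and learning the output is what takes this option away.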

Beyond smart contracts, randomness is also important for other critical parts of the operation of blockchain systems, such as the election of leaders in proof-of-stake protocols:

“Another related problem is electing leaders and validators in proof of stake protocols. In this case it turns out that being able to influence or predict randomness allows a miner to affect when they will be chosen to mine a block. There are a wide variety of techniques for overcoming this issue, such as Ouroboros’s verifiable secret-sharing scheme. However, they all suffer from the same pitfall: a non-colluding honest majority must be present.”

With these problems in mind, Boneh, et al. introduced the concept of verifiable delay functions (VDFs) in 2018.

“VDFs are functions that require a moderate amount of sequential computation to evaluate, but once a solution is found, it is easy for anyone to verify that it is correct.”

VDFs introduce a forced time delay to an output so that malicious actors can’t influence it by predicting future values. A valid VDF must have the following properties:

Sequential: Anyone can compute f(x) in t sequential steps, but no adversary with a large number of processors can distinguish the output of f(x) from random in significantly fewer steps. This is really important if we want to tackle the problems mentioned above. A malicious actor shouldn’t be able to learn anything about the output from an intermediate state of the computation, as that would give them an advantage over “what comes next”.

Efficiently verifiable: Given the output y, any observer can verify that y = f(x) in a short amount of time (ideally in time polylogarithmic in t).

And an interesting note from this article about VDFs: why can’t a hash chain be considered a VDF?

“Before jumping into VDF constructions, let’s examine why an “obvious” but incorrect approach to this problem fails. One such approach would be repeated hashing. If the computation of some hash function h takes t steps to compute, then using

f = h(h(...h(x)))

as a VDF would certainly satisfy the sequential requirement above. Indeed, it would be impossible to speed this computation up with parallelism since each application of the hash depends entirely on the output of the previous one. However, this does not satisfy the efficiently verifiable requirement of a VDF. Anyone trying to verify that f(x) = y would have to recompute the entire hash chain. We need the evaluation of our VDF to take exponentially more time to compute than to verify.”
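The point of the quote can be seen in a few lines of Python. This is only a sketch, with SHA-256 standing in for h and the function names chosen for illustration:

```python
import hashlib

def hash_chain(x: bytes, t: int) -> bytes:
    """Apply SHA-256 t times; each step needs the previous digest."""
    y = x
    for _ in range(t):
        y = hashlib.sha256(y).digest()
    return y

def verify_hash_chain(x: bytes, t: int, y: bytes) -> bool:
    """No shortcut is known: the verifier simply redoes all t hashes."""
    return hash_chain(x, t) == y

y = hash_chain(b"seed", 1000)
print(verify_hash_chain(b"seed", 1000, y))
```

Evaluation and verification run exactly the same loop, so the verifier pays the full delay as well.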

A simple construction

So what would be a good candidate for a VDF? Let’s follow the example from here. The specific VDF that we are going to build is an operation over a finite group of unknown order (i.e. over a set of numbers with a clear upper limit and a specific number of elements which is unknown). A good way of building such a group is to define all operations over a set of numbers modulo N (where N can be something as fancy as the product of two safe primes p and q). As long as nobody knows p and q, nobody knows the order of the group.

With that in mind, our VDF could be represented as the following function:

y = [x^(2^T)] mod N

Where x is the input value, hashed into the group, T is the publicly known delay parameter that determines the duration of the delay, and y is the output. We are operating over the finite group, so we will drop the modulo N from now on (consider it implied).

With this construction, this simple function already fulfills one of the basic properties required for it to become a valid VDF candidate: it is sequential. At every step in the computation we need the result of the previous operation for the next iteration, and since we don’t know the order of the group there is no way to skip ahead, making it serial. To compute x^(2^T) we will have to sequentially compute x^(2^i) with i from zero to T, reducing modulo N at every step so that the result stays inside our finite group. This is also the reason why we say T is the delay parameter: we need T steps to get the final output.
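As a minimal Python sketch, the evaluation is just a loop of modular squarings. The modulus below is a tiny toy value picked for illustration; a real deployment would need an N of cryptographic size whose factors nobody knows.

```python
def vdf_eval(x: int, T: int, N: int) -> int:
    """Compute x^(2^T) mod N with T sequential squarings."""
    y = x % N
    for _ in range(T):
        y = (y * y) % N  # each squaring needs the previous result
    return y

# Toy parameters (assumptions for illustration only).
p, q = 1019, 1283
N = p * q
y = vdf_eval(3, 1000, N)
print(y)
```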

“The repeated squaring computation is not parallelizable and reveals nothing about the end result until the last squaring. These properties are both due to the fact that we do not know the order of G. That knowledge would allow attackers to use group theory based attacks to speed up the computation.”
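To see why the order has to stay unknown, here is a hedged Python sketch of the shortcut available to someone who knows the factors of N. It relies on Euler's theorem, so it assumes x is coprime to N. The parameters are toy values chosen for illustration.

```python
def vdf_eval(x: int, T: int, N: int) -> int:
    """Honest evaluation: T sequential squarings modulo N."""
    y = x % N
    for _ in range(T):
        y = (y * y) % N
    return y

def vdf_shortcut(x: int, T: int, p: int, q: int) -> int:
    """Shortcut for someone who knows p and q (requires gcd(x, p*q) == 1)."""
    N = p * q
    order = (p - 1) * (q - 1)   # size of the multiplicative group mod N
    e = pow(2, T, order)        # reduce the huge exponent 2^T modulo the order
    return pow(x, e, N)         # a single fast modular exponentiation

p, q = 1019, 1283               # toy primes; in practice these must stay secret
slow = vdf_eval(3, 100_000, p * q)
fast = vdf_shortcut(3, 100_000, p, q)
print(slow == fast)
```

The shortcut costs roughly log(T) work instead of T squarings, which is exactly the speed-up the delay is supposed to rule out.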

Ok, so we have a sequential function, now we need to make it efficiently verifiable so that a verifier doesn’t need to follow the full T steps again. This can be done by building a proof. For this example, the prover and the verifier will run an interactive protocol to perform the validation of the VDF.

To prove the VDF, the prover first announces the output y. The verifier then picks a random prime number, L, and sends it to the prover. The output y has to be fixed before L is known, otherwise the prover could pick any proof and work backwards to a matching y. Now the prover divides 2^T by L to get the quotient q and the remainder r. He computes x^q and sends it to the verifier.

The verifier now computes r = (2^T) mod L and checks that y is equal to (x^q)^L * x^r. If this is the case then the verifier can be convinced that y is indeed the output of the VDF, and therefore that the prover has “suffered” the computation of the T steps. Let’s summarize the interactive protocol here for clarity:

Prover:

Computes y = x^(2^T) and sends it to the verifier.

Verifier:

Generates a random prime L and sends it to the prover.

Prover:

Computes: (q, r) such that 2^T = q*L + r

Then: pi = x ^ q

Sends pi to the verifier

Verifier:

Computes: r = (2^T) mod L

Check: y = pi^L * x^r
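The exchange can be sketched end to end in Python. Everything here is a toy under stated assumptions: the modulus is tiny, the function names are my own, the challenge L is drawn as a random prime, and the output y is fixed before L is drawn. The prover also builds 2^T as an explicit big integer, which is only reasonable for small T.

```python
import secrets

def is_prime(n: int) -> bool:
    """Trial division; only suitable for the small numbers in this sketch."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True

def random_prime(bits: int = 24) -> int:
    """Verifier's challenge: a random prime with the given bit length."""
    while True:
        candidate = secrets.randbits(bits) | (1 << (bits - 1)) | 1
        if is_prime(candidate):
            return candidate

def evaluate(x: int, T: int, N: int) -> int:
    """Prover: T sequential squarings."""
    y = x % N
    for _ in range(T):
        y = (y * y) % N
    return y

def prove(x: int, T: int, N: int, L: int) -> int:
    """Prover: pi = x^q with q = floor(2^T / L)."""
    q = (1 << T) // L
    return pow(x, q, N)

def verify(x: int, y: int, pi: int, T: int, N: int, L: int) -> bool:
    """Verifier: check y == pi^L * x^r with r = 2^T mod L (cheap to compute)."""
    r = pow(2, T, L)
    return y % N == (pow(pi, L, N) * pow(x, r, N)) % N

N = 1019 * 1283                 # toy modulus (assumption)
x, T = 3, 1000
y = evaluate(x, T, N)           # prover commits to y first
L = random_prime()              # then the verifier draws its challenge
pi = prove(x, T, N, L)
accepted = verify(x, y, pi, T, N, L)
print(accepted)
```

The check works because 2^T = q*L + r, so pi^L * x^r = x^(q*L + r) = x^(2^T). The verifier only performs two short modular exponentiations instead of T squarings.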

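And voilà! VDF execution verified. This proof can also be made non-interactive: instead of the verifier picking L, both parties derive it from a hash of the input and output (the Fiat-Shamir heuristic), so the hash function takes over the role of the verifier’s randomness.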
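One common way to remove the interaction is to derive L by hashing the statement being proved, so that the prover cannot choose it freely. The Python sketch below is a toy under assumptions of my own: `hash_to_prime`, its 24-bit output and the tiny modulus are illustrative choices, not parameters from any specification.

```python
import hashlib

def is_prime(n: int) -> bool:
    """Trial division; fine for the small primes used here."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True

def hash_to_prime(x: int, y: int, T: int, bits: int = 24) -> int:
    """Derive the challenge L from (x, y, T) by hashing until a prime shows up."""
    counter = 0
    while True:
        data = f"{x}|{y}|{T}|{counter}".encode()
        digest = hashlib.sha256(data).digest()
        candidate = (int.from_bytes(digest, "big") >> (256 - bits)) | 1
        if is_prime(candidate):
            return candidate
        counter += 1

def prove(x: int, T: int, N: int):
    """Prover: evaluate, derive L from the result, then build pi."""
    y = x % N
    for _ in range(T):
        y = (y * y) % N
    L = hash_to_prime(x, y, T)
    pi = pow(x, (1 << T) // L, N)
    return y, pi

def verify(x: int, y: int, pi: int, T: int, N: int) -> bool:
    """Verifier: recompute L from public data, then run the usual check."""
    L = hash_to_prime(x, y, T)
    r = pow(2, T, L)
    return y % N == (pow(pi, L, N) * pow(x, r, N)) % N

N = 1019 * 1283                 # toy modulus (assumption)
y, pi = prove(3, 1000, N)
print(verify(3, y, pi, 1000, N))
```

Since L depends on y, the prover has to settle on an output before learning the challenge, which mirrors the ordering of the interactive version.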

Where to learn more

There are several VDF candidates out there since Boneh’s proposal:

“There are currently three candidate constructions that satisfy the VDF requirements. Each one has its own potential downsides. The first was outlined in the original VDF paper by Boneh, et al. and uses injective rational maps. However, evaluating this VDF requires a somewhat large amount of parallel processing, leading the authors to refer to it as a “weak VDF.” Later, Pietrzak and Wesolowski independently arrived at extremely similar constructions based on repeated squaring in groups of unknown order.”

But the best resource, without a doubt, to learn about everything that is happening around VDFs is VDF research. I wish I had the time to read and watch all the impressive content included in this site (I would become an expert in VDFs :) ). Here you will find the latest papers, talks and a lot of wonderful knowledge about this field. Below you can see a figure of many subfields of VDFs.

Actually, one of the fields that has ended up interesting me the most (after a glance at the content of VDF research) is the hardware-software co-design of VDFs. A good example of it can be found in this talk:

Let’s get practical

So you want to see VDFs in action, right? The easiest way I’ve found is to use this repo which includes a Rust implementation of Boneh’s VDF. First you need to install the tool as follows (be sure to have Rust installed on your machine before following these steps):

$ sudo apt-get install -y libgmp-dev

$ git clone https://github.com/poanetwork/vdf.git

$ cd vdf

$ cargo install --path=vdf-cli

With the tool installed, you are now able to run the VDF:

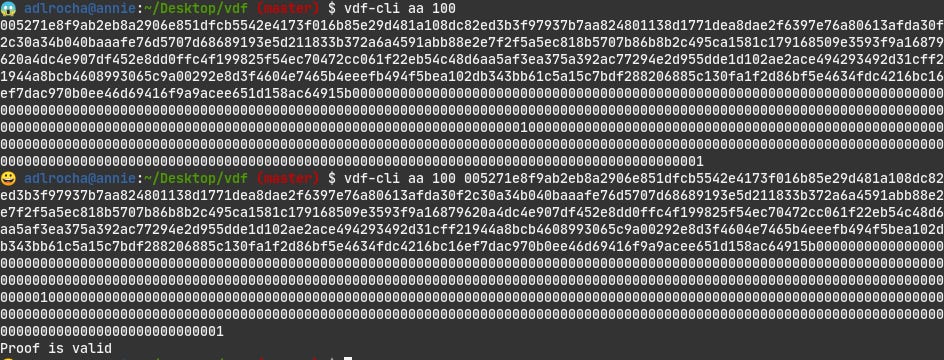

$ vdf-cli aa 100

And verify its execution by pasting the output into the command as follows:

$ vdf-cli aa 100 005271e8f9ab2eb8a2906e851dfcb5542e4173f016b85e29d481a108dc82ed3b3f97937b7aa824801138d1771dea8dae2f6397e76a80613afda30f2c30a34b040baaafe76d5707d68689193e5d211833b372a6a4591abb88e2e7f2f5a5ec818b5707b86b8b2c495ca1581c179168509e3593f9a16879620a4dc4e907df452e8dd0ffc4f199825f54ec70472cc061f22eb54c48d6aa5af3ea375a392ac77294e2d955dde1d102ae2ace494293492d31cff21944a8bcb4608993065c9a00292e8d3f4604e7465b4eeefb494f5bea102db343bb61c5a15c7bdf288206885c130fa1f2d86bf5e4634fdc4216bc16ef7dac970b0ee46d69416f9a9acee651d158ac64915b

A cool thing to try (if you have the time) is to increase the delay parameter (that we set to 100 in the above example) to something high such as 10000, and see how computing the VDF takes a significant amount of time, while verifying the result is performed almost instantly. But this is what VDFs are all about, right?

I still have a lot to learn about these cryptographic primitives and their potential uses, but this first contact with them has been pretty fun. See you next week!

Some additional visual aids

Finally, I leave you with two interesting videos that will definitely explain better than I can what VDFs are all about. Enjoy!