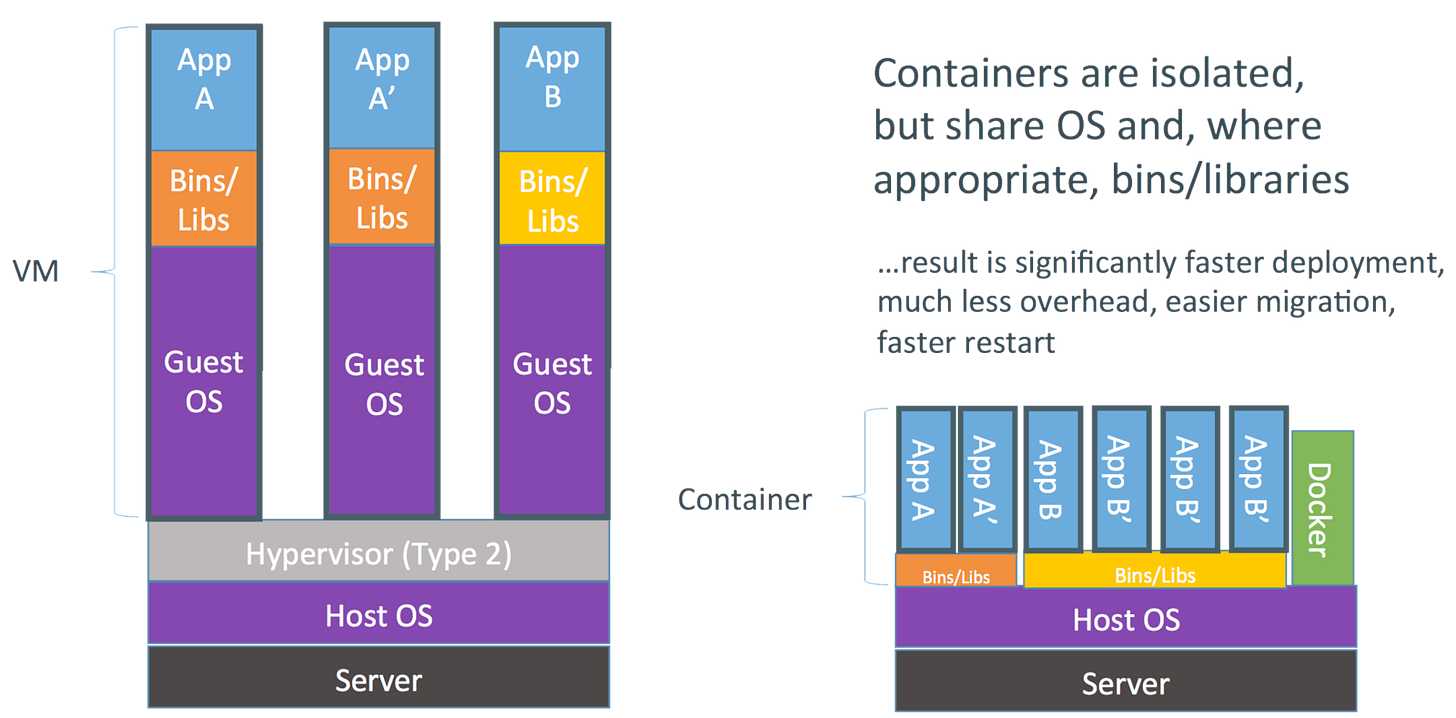

Cloud computing, microservices, serverless, scalable and affordable computing… all of this has been made possible mainly by an outstanding piece of technology: Linux Containers (LXC). Linux containers provide an OS-level virtualized sandbox. In a nutshell, containers allow you to run multiple isolated Linux systems on a single host. Using certain features of the Linux kernel, they partition shared resources (memory, CPU, the filesystem) into isolation levels called “namespaces”. A container runs directly on the physical hardware with no emulation and very little overhead (aside from a bit of initialization to set up the namespaces). The most popular tool using Linux containers today is Docker. Linux containers are different from a virtual machine, where VM management software (VirtualBox, VMware ESXi, etc.) emulates physical hardware and the VM runs within that emulated environment.

LXC has been key to the development of cloud computing, but a new player (one I’ve talked about before in this newsletter) has entered the game. Yes, I am referring to WebAssembly. I think I’ve copy-pasted WASM definitions a few times now, but I feel it is worth doing it one last time for the sake of clarity: “WebAssembly, is an open standard for a new binary format. By design, it is memory-safe, portable, and runs at near-native performance. Code from other languages can be cross-compiled to WebAssembly. Currently, there’s first-class support for Rust, C/C++ and AssemblyScript (a new language built for WebAssembly, compiled against a subset of TypeScript). Many other compilers are already in development”.

As we already know, WASM was originally designed for the browser as a way of replacing JavaScript in computationally intensive applications, but the idea of a cross-compiled binary format that could provide a fast, scalable and secure way of running the same code across all machines was pretty appealing. This is why WASI (the WebAssembly System Interface) was born (the 2019 announcement here). WASI is a new standard that extends the execution of WebAssembly to the OS. It introduces a new level of abstraction so that WASM binaries can be “compiled once, and run anywhere”, independently of the underlying platform. This is what got me excited about WASM last year, and what triggered the publication of this post in my newsletter.
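To make “compiled once, and run anywhere” a bit more concrete, here is a minimal sketch of a Rust program that only uses the standard library. Assuming a Rust toolchain with a WASI target installed (named `wasm32-wasi` at the time of writing), building it with `cargo build --target wasm32-wasi` should produce a `.wasm` binary that a WASI runtime such as Wasmtime can execute. The `greeting` helper and its message are made up for illustration.

```rust
use std::env;

/// Builds the message to print. Hypothetical helper, kept separate from
/// `main` so it can be checked without running the binary.
fn greeting(name: &str) -> String {
    format!("Hello, {}!", name)
}

fn main() {
    // Command-line arguments are part of what WASI exposes to a module;
    // fall back to "world" when none is given.
    let name = env::args().nth(1).unwrap_or_else(|| "world".to_string());
    println!("{}", greeting(&name));
}
```

The point is that nothing in the source is WASM-specific: the same file builds natively or for WASI, and only the compilation target changes.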

The Inception

However, the other day I was developing a small Rust microservice, and at deployment time I started wondering, “wait a minute, WASM may also come in pretty handy here”. Specifically, what I was developing was a simple server that listens to a set of webhooks and triggers actions in a database and other services according to the webhook triggered and its specific content. It was a stateless microservice, and I wanted it to be as lightweight as possible (thus, Rust). I was Dockerizing the service when I realized, “why can’t I compile my Rust microservice into WASM and run it as-is on my infrastructure as if it were a serverless function?” That was when I started researching the use of WASM in serverless environments. Apparently, many have tried this before using AWS Lambda and Azure Functions, but I hate vendor lock-in. I already use Kubernetes to manage my deployments (thus the Dockerization of my microservice), so why couldn’t I run raw WASM binaries, without additional virtualization, as if they were Docker containers on Kubernetes? This would allow LXC and WASM workloads to coexist in my Kubernetes cluster, letting me deploy lightweight WASM functions and applications (fast to wake up, thanks to the small size of WASM binaries) on Kubernetes, combining a containerized and a serverless approach in my infrastructure.
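To give an idea of the kind of logic I am talking about, here is a rough sketch of a stateless webhook dispatcher. The webhook kinds, table names and service names are all hypothetical (they are not the ones from my actual service), and the HTTP server part is left out on purpose.

```rust
/// Hypothetical webhook kinds, invented for this sketch.
#[derive(Debug, PartialEq)]
enum Webhook {
    UserCreated { email: String },
    PaymentFailed { invoice_id: u64 },
}

/// Hypothetical actions the service could trigger in response.
#[derive(Debug, PartialEq)]
enum Action {
    InsertRow { table: &'static str, value: String },
    NotifyService { service: &'static str, message: String },
}

/// Pure function: the same webhook always yields the same actions,
/// and nothing is remembered between calls.
fn dispatch(hook: &Webhook) -> Vec<Action> {
    match hook {
        Webhook::UserCreated { email } => vec![Action::InsertRow {
            table: "users",
            value: email.clone(),
        }],
        Webhook::PaymentFailed { invoice_id } => vec![
            Action::InsertRow {
                table: "failed_payments",
                value: invoice_id.to_string(),
            },
            Action::NotifyService {
                service: "billing",
                message: format!("invoice {} failed", invoice_id),
            },
        ],
    }
}

fn main() {
    let hook = Webhook::PaymentFailed { invoice_id: 42 };
    for action in dispatch(&hook) {
        println!("{:?}", action);
    }
}
```

Because the dispatcher keeps no state between calls, any instance can be started, stopped or replaced at will, which is exactly what makes this kind of service a good candidate for a function-style deployment.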

WASM in the cloud means not only fast start-up times for lightweight processes that are frequently put to sleep and woken up, near-native performance and lighter binaries (some of the reasons for its development in the first place), but also a runtime environment that is sandboxed by design. WASM’s security model has two important goals: “(1) protect users from buggy or malicious modules, and (2) provide developers with useful primitives and mitigations for developing safe applications, within the constraints of (1)”. This is something cloud security engineers have been trying to achieve in Docker for years.

Docker vs. WASM

Deeper in my research, I realized I wasn’t the only one seeing the potential of WASM in the cloud. Even Docker’s founder, Solomon Hykes, had already realized the impact the combination of WASM and WASI could have in cloud environments:

I highly recommend reading through the responses to the above tweet to find gems like this one:

And then I came across this Microsoft blog post and Krustlet’s announcement, which had the answers I was looking for.

“Importantly, at a very high level WASM has two main features that the Kubernetes ecosystem might be able to use to its advantage:

WebAssemblies and their runtimes can execute fast and be very small compared to containers

WebAssemblies are by default unable to do anything; only with explicit permissions can they execute at all

These two features hit our sweet spot, which suggested to us that we might profitably use WASM with Kubernetes to work in constrained and security-conscious environments – places where containers have a harder time.”
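The “unable to do anything by default” idea can be illustrated with a toy model. In actual WASI runtimes, filesystem access is granted by the host through pre-opened directories (for instance, Wasmtime's `--dir` option); the `Sandbox` type below is not a real runtime API, just a hypothetical sketch of the same deny-by-default logic.

```rust
use std::path::{Path, PathBuf};

/// Toy model of a deny-by-default sandbox. Illustrative only.
#[derive(Default)]
struct Sandbox {
    /// Directories the host has explicitly granted to the module.
    granted_dirs: Vec<PathBuf>,
}

impl Sandbox {
    /// Grants access to one directory and everything below it.
    fn grant_dir(mut self, dir: impl Into<PathBuf>) -> Self {
        self.granted_dirs.push(dir.into());
        self
    }

    /// A path is allowed only if it lies under a granted directory.
    /// Caveat: path components are compared literally; `..` and symlinks
    /// are not resolved, which a real runtime would have to handle.
    fn can_access(&self, path: &Path) -> bool {
        self.granted_dirs.iter().any(|dir| path.starts_with(dir))
    }
}

fn main() {
    // With no grants, every access is refused.
    let locked = Sandbox::default();
    println!("{}", locked.can_access(Path::new("/data/config.toml")));

    // After an explicit grant, only paths under that directory are allowed.
    let opened = Sandbox::default().grant_dir("/data");
    println!("{}", opened.can_access(Path::new("/data/config.toml")));
    println!("{}", opened.can_access(Path::new("/etc/passwd")));
}
```

The design choice worth noticing is the direction of the default: the module starts with an empty list of grants, and the host has to add each one explicitly, rather than starting with full access and trying to take things away.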

Krustlet in action!

Krustlet has been designed to run as a Kubernetes kubelet. The kubelet is the node agent that runs on every node of a cluster and is responsible for ensuring the correct execution of the requested workloads. Krustlet is a kubelet written in Rust that listens on the Kubernetes API event stream for new WASM / WASI pods. (I highly recommend Deis Labs’ post on Krustlet to understand how their team has been rewriting parts of Kubernetes, originally written in Go, in Rust, achieving more concise, readable, and stable code. It is settled: I have to make Rust my go-to language once I gain a bit more proficiency.)
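To picture what “listening on the event stream for new pods” means, here is a deliberately simplified sketch of a node agent. This is not Krustlet's code or API: `PodEvent`, `NodeAgent` and the `wasm32-wasi` architecture string are placeholders I am assuming for the example, and a real kubelet deals with far richer objects.

```rust
use std::collections::BTreeSet;

/// Hypothetical, simplified pod events.
enum PodEvent {
    Added { name: String, arch: String },
    Deleted { name: String },
}

/// Toy node agent that only accepts pods built for its own architecture.
struct NodeAgent {
    arch: String,
    running: BTreeSet<String>,
}

impl NodeAgent {
    fn new(arch: &str) -> Self {
        NodeAgent {
            arch: arch.to_string(),
            running: BTreeSet::new(),
        }
    }

    /// Applies one event from the stream and updates the set of running pods.
    fn handle(&mut self, event: PodEvent) {
        match event {
            // A pod targeting this node's architecture: start tracking it.
            PodEvent::Added { name, arch } if arch == self.arch => {
                self.running.insert(name);
            }
            // A pod meant for another kind of node: ignore it.
            PodEvent::Added { .. } => {}
            PodEvent::Deleted { name } => {
                self.running.remove(&name);
            }
        }
    }
}

fn main() {
    let mut agent = NodeAgent::new("wasm32-wasi");
    agent.handle(PodEvent::Added { name: "hello-wasm".into(), arch: "wasm32-wasi".into() });
    agent.handle(PodEvent::Added { name: "nginx".into(), arch: "amd64".into() });
    println!("{:?}", agent.running);
}
```

In this model, container pods and WASM pods share one event stream, and each node agent simply picks up the workloads it knows how to run, which is how both kinds of workload could coexist in one cluster.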

In the end, Krustlet was just what I was looking for to deploy my Rust microservice and test this idea of “WASM in the cloud” that many had come up with before. To test Krustlet, I followed their Kubernetes in Docker (KinD) quick start guide. I faced several problems while setting up the default gateway (step 2). I have a Linux machine, and maybe I messed up in the previous step (setting up the certificates), because the solution to my issue was starting the tutorial again from scratch. Anyway, with Krustlet deployed in my KinD cluster, I tried the “hello-world-wasi-rust” demo, and oh man! I got really f* excited!

I then compiled my Rust service into WASM and tried to deploy it using Krustlet. I wasn’t successful in my endeavor, but to be completely honest, I haven’t yet found the time to dedicate a full, uninterrupted hour to this, so I may have messed up in many ways in the process. Either way, I got really excited about the potential of this, and that’s why I decided to write this early publication introducing Krustlet (even though I haven’t yet managed to deploy my own WASM application on it). I’ll come back with a step-by-step guide and new conclusions once I manage to deploy something other than the Krustlet demo applications. I have a lot of ideas for things I want to test with Krustlet that could be huge, and I wish I could make these experiments and developments my full-time job (should I open a Patreon? Comments are open).

A lot of work ahead

I would like to close with a reflection from Microsoft’s blog post:

“Both WebAssemblies and containers are needed

Despite the excitement about Wasm and WASI, it should be clear that containers are the major workload in K8s, and for good reason. No one will be replacing the vast majority of their workloads with WebAssembly. Our usage so far makes this clear. Do not be confused by having more tools at your disposal.

For example, WebAssembly modules are binaries and not OS environments, so you can’t simply bring your app code and compile it into a WASM like you can a container. Instead, you’re going to build one binary, which in good cloud-native style should do one thing, and well. This means, however, that WASM “pods” in Kubernetes are going to be brand new work; they likely didn’t exist before. Containers clearly remain the vast bulk of Kubernetes work.”

Still, for me, having “specialized WASM services” (such as my lightweight Rust webhook listener) running in the same infrastructure as container jobs can have a huge impact on the ecosystem in terms of comfort, security, ease of management, and efficiency.

“WASMs can be packed very, very densely, however, so using WebAssembly might maximize the processing throughput for large public cloud servers as well as more constrained environments. They’re unable to perform any work unless granted the permissions to do so, which means organizations that do not yet have confidence in container runtimes will have a great possibility to explore. And memory or otherwise constrained environments such as ARM32 or other system-on-a-chips (SOCs) and microcontroller units (MCUs) may be now attachable to and schedulable from larger clusters and managed using the same or similar tooling to that which Kubernetes uses right now.”

And I love this last point, and it is something I definitely want to try. With LXC you are limited to running containers on architectures that support them (have you tried, for instance, Docker on Windows? I know…), but with WASM we open the door to running virtual WASM environments on any kind of architecture (even if virtualization or containers are not supported). I don’t know about you, but the more I read and learn about WASM, the more excited I become about the technology. I may be a bit biased, but who cares!

And for the sake of completeness, one last video about the execution of WASM outside the browser:

See you next week!