@adlrocha - What if we add compression to libp2p?

A lot of decentralized applications could really benefit from it.

If you have been following me for a few months you know that I’ve been head down trying to improve file-sharing in IPFS and P2P networks in general. I started the project exploring Bitswap, one of the protocols used within IPFS for file-sharing. As a consequence of this initial exploration of Bitswap I decided to start lovingly referring to the project as “Beyond Bitswap”, not because the aim of the project is exclusively to improve Bitswap, but as a chronological consequence of my work. I needed a foundation and a baseline to start evaluating file-sharing in P2P networks, and what better than to start evaluating and testing new ideas in the exchange protocol of an already deployed p2p network with the infrastructure in place.

Throughout these few months of work we have explored different ideas to improve file-sharing (such as the use of network coding), and have shown how the naïve implementation of simple ideas in P2P environments may lead to extremely inefficient results (forcing us to use more complex data structures than the ones we may be used to —like HAMTs—).

As a result of these few months of work we have come up with ideas and proposals to improve Bitswap, and go “Beyond Bitswap” by exploring the implementation of new protocols and schemes. Consequently, today I want to share one of those ideas we evaluated within Bitswap but that can potentially allow us to go “Beyond Bitswap”.

HTTP uses compression, why not us?

Compression is already an important and convenient way to increase performance in Web 2.0. For certain files, we can reach size reductions of up to 70%, with the corresponding improvements in bandwidth requirements. Compression in the web happens at three different levels:

First, some file formats are compressed with specific optimized methods, such as specific video and image compression algorithms.

Then general encryption can happen at the HTTP level (the resource transmitted is compressed end-to-end).

And finally, compression can be defined at the connection level, between two nodes of an HTTP connection.

These last two points are the one that got me thinking: “are we currently using compression in any way in the exchange of files between nodes in IPFS and other P2P networks?” If HTTP is reaching significant improvements by using compression at a connection level, why can’t we also leverage this?

A quick overview of compression in HTTP

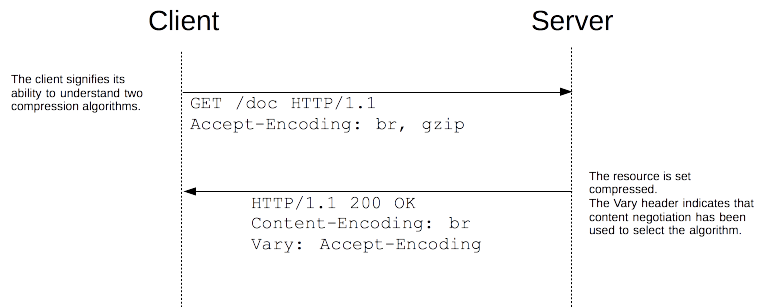

Nowadays there two relevant compression algorithms used within HTTP: gzip, the most common one, and br the new challenger. To select the algorithm to use, browsers and servers use proactive content negotiation. The browser sends an Accept-Encoding header with the algorithm it supports and its order of precedence, the server picks one, uses it to compress the body of the response and uses the Content-Encoding header to tell the browser the algorithm it has chosen [source]. The strategy that leads to better performance improvements is the use of end-to-end compression where the body of the HTTP message is compressed using the negotiated algorithm.

Another compression approach introduced in HTTP/2 is header compression. Specifically, HTTP/2 uses a header compression algorithm called HPACK (the analogous QPAK has also been proposed already for QUIC - HTTP/3). HPAK uses a set of dictionaries to encode the content of headers.

When HPACK needs to encode a header in the format name:value, it will first look in the static and dynamic dictionaries. If the fullname:value is present, it will simply reference the entry in the dictionary. This will usually take one byte, and in most cases two bytes will suffice!

Since many headers are repetitive, this strategy has a very high success rate. For example, headers like :authority:www.cloudflare.com or the sometimes huge cookie headers are the usual suspects in this case. When HPACK can't match a whole header in a dictionary, it will attempt to find a header with the same name. Most of the popular header names are present in the static table, for example: content-encoding, cookie, etag. The rest are likely to be repetitive and therefore present in the dynamic table. For example, Cloudflare assigns a unique cf-ray header to each response, and while the value of this field is always different, the name can be reused! More about HPACK in this source.

From HTTP to Bitswap...

So there are essentially two main widespread compression strategies in HTTP: (i) the full body compression; (ii) and the header compression. Of course other approaches could be used like using a lossy-compression to reduce the size of images and videos as mentioned above, but for me these strategies were outside the protocol-level I was interested in. I needed a compression approach agnostic of the content (at least for now).

With this in mind I thought, “Ok! What compression approaches could we follow to minimize the amount of data exchanged between two P2P nodes, and in our case at hand, two Bitswap nodes when exchanging data”. So we evaluated the implementation of three different strategies:

Block compression: Files within Bitswap are exchanged in the form of blocks. Files are composed of several blocks organized in a DAG structure with each block having a size limit of 256KB (check out these ProtoSchool tutorials to learn a bit more about this). In this compression approach, we compress blocks before including them in a message and transmitting them through the link. This can be considered the equivalent to compressing the body in HTTP.

Full message compression: In this compression strategy instead of only compressing blocks we compress every single message before sending it, i.e. the equivalent of compressing header+body in HTTP.

Stream compression: It uses compression at a stream level, so every byte that enters the stream writer of a node at a transport level will be conveniently compressed using a stream wrapper. Actually, if you for instance have a look at this Golang implementation of a GZip HTTP handler, this is the approach they follow.

For convenience, we used GZIP as the compression algorithm for the aforementioned strategies. Many have reported the compression benefits of brotli over GZIP, but I couldn’t find a stable implementation of brotli in Golang, so in order to quickly evaluate our assumptions I had to settle for GZip for the time being --but this shouldn’t affect the conclusions we wanted to draw, “it makes sense to use compression in P2P file-sharing? And to what extent?”.

We performed some preliminary evaluations on the implementations of the aforementioned strategies over our file-sharing Testground testbed —I just realized I haven’t talked yet about Testground in this newsletter, more about this next week— and we reached these interesting results:

The rate of compression achieved using the block compression strategy is significantly lower than using the full message strategy or the stream compression. This is specially the case if we exchange random files, i.e. without a lot of redundancy. This makes total sense, even when exchanging random files, the compressor is able to find redundant structures when compressing full messages (or the stream), while blocks of a random file may in certain cases present not a bit of redundancy.

The computational overhead and the file-sharing latency using block and full message compression is significantly higher than when using stream compression. Another expected outcome: while in stream compression the node can directly “spit” the data to the transport layer as it will be compressed “on-the-go” through the stream wrapper, in the block and full message compression strategies the node has to perform the appropriate compressions before it can send the first byte to the transport layer. Apart from this, there are some other implementation details that make it more convenient using stream compression over the other two strategies, but they are not relevant for this discussion.

Lessons learned? Applying compression at a stream level can lead to potential performance improvements and a more efficient use of bandwidth compared to other “on-the-go” compression strategies in P2P networking protocols. We managed to show this over an existing file-sharing protocol such as Bitswap, but how far could we get with these results?

… and to libp2p?

I can’t stress this enough, the aim of the “Beyond Bitswap” project is to improve file-sharing in P2P networks as a whole, not only IPFS and Bitswap. To be completely honest, in the light of these results at first my level of excitement was pretty moderate, but after a few discussions with my most direct colleagues, my level of excitement went to the sky.

In the end, the compression strategy that led to the best results in our evaluations wasn’t at a protocol-level but at a transport-level. This means that if we implemented stream level compression embedded in the underlying transport layer not only file-sharing protocols would benefit from compression, but any other P2P protocol leveraging that same transport layer.

Bitswap uses lib2p as its underlying P2P transport layer, and just like Bitswap there are already a good bunch of other applications using libp2p as their transport layer that could directly start benefiting from the use of stream compression (there are implementations of libp2p in Go, JavaScript, Rust, Python, C+, and even Nim).

My idea for the next few weeks is to add a compression wrapper interface over libp2p’s MuxedStreams so that one can implement his own compression algorithm and seamlessly embed it in his libp2p streams. My aim is to try to make a compression interface proposal and have a proof-of-concept using GZip compression similar to the implementation I used for my evaluation over Bitswap.

This may take a few days, so if in the meantime you want to start using stream compression in your libp2p application here is an example of how to easily wrap a libp2p stream into a GZip compressed stream: https://gist.github.com/adlrocha/12a3746965d1b9770c01831e1feae7e4

package main

import (

"compress/gzip"

"sync"

"github.com/libp2p/go-libp2p-core/network"

"go.uber.org/multierr"

)

type compressedStream struct {

network.Stream

wlock sync.Mutex

w *gzip.Writer

r *gzip.Reader

}

func compressStream(s network.Stream) network.Stream {

return &compressedStream{Stream: s, w:

gzip.NewWriter(s)}

}

func (c *compressedStream) Write(b []byte) (int, error) {

c.wlock.Lock()

defer c.wlock.Unlock()

n, err := c.w.Write(b)

return n, multierr.Combine(err, c.w.Flush())

}

func (c *compressedStream) Read(b []byte) (int, error) {

if c.r == nil {

// This _needs_ to be lazy as it reads a header.

var err error

c.r, err = gzip.NewReader(c.Stream)

if err != nil {

return 0, err

}

}

n, err := c.r.Read(b)

if err != nil {

c.r.Close()

}

return n, err

}

func (c *compressedStream) Close() error {

c.wlock.Lock()

defer c.wlock.Unlock()

return multierr.Combine(c.w.Close(), c.Stream.Close())

}Special thanks to Stebalien for helping me figure out how to wrap compression in a stream, this work wouldn’t have been possible without his invaluable insight.

Something to add? Comments, Github issues, and Twitter inquires are open :) See you next week!